A hybrid slowly changing dimension (SCD) is a specific type of SCD that implements both Type 1 and Type 2 in the same dimension.

Business users in certain domains need to analyse data from both the current and the historical perspective to gain useful insights. As we know, a Type 1 SCD supports analysis against the current version of the data, while a Type 2 SCD supports analysis across its complete history.

To be specific, consider the customer data shown below.

I will use the customer Jimmy Adams to demonstrate a change of occupation. Jimmy Adams is from Cambridge, he is a customer of our imaginary company, and his current occupation is student, as shown above.

Let's imagine that Jimmy Adams starts working and his occupation changes to working, as shown below.

Our requirement is to analyse the data by current status and by complete employment status history, which essentially means Type 1 and Type 2 SCD together, as shown below.

I will implement this requirement with SSIS 2012. I have created an SSIS package whose control flow looks like the one below.

It contains a Foreach Loop container that loops through the text files in a folder and maps each file's location to a variable. I will use that variable in an expression on the connection string of my customer source in the data flow, so that the package extracts every .txt file in that folder.

The Foreach Loop container's collection settings are shown below.

The Foreach Loop variable mapping is shown below.

With the control flow configured, we move to the data flow, create a flat file source, and set the connection string of its flat file connection using an expression in the Properties window. The expression maps to our Foreach Loop variable and picks up the file location, as shown in the image.
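For readers who find code easier to follow than designer settings, the loop and the connection-string expression behave roughly like the Python sketch below. It only illustrates the pattern and is not what SSIS executes. The function names `list_customer_files` and `load_all` are hypothetical, and sorting the files is my own choice for a predictable order.

```python
from pathlib import Path


def list_customer_files(folder):
    """Return every .txt file in `folder`, similar to a *.txt file enumerator."""
    # Sorting is an assumption made here for a predictable order.
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() == ".txt"
    )


def load_all(folder, extract):
    """Call `extract` once per file, passing the current file path.

    The path argument plays the role of the loop variable that the
    connection-string expression reads on each iteration.
    """
    return [extract(str(path)) for path in list_customer_files(folder)]
```

Here `extract` stands in for the whole data flow: it is invoked once per file, just as the data flow task runs once per loop iteration with a different connection string.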

To keep it simple, I'm using the built-in Slowly Changing Dimension component; I will explain later how to configure it for our scenario.

The data flow starts by extracting the customer files. Then we add a Copy Column transformation to create a second employment status column, because we need one column for the Type 1 (current) value and another to hold the employment status history (Type 2). Our data flow looks like the one below.

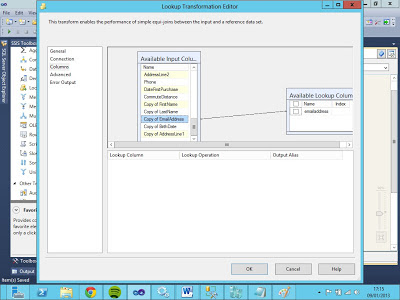
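As a rough illustration of what the Copy Column step contributes, the sketch below duplicates one field of each row under a new name. The helper `copy_column` and the field names `Customer` and `City` are assumptions for the example; only the values for Jimmy Adams come from the demo data.

```python
def copy_column(rows, source, target):
    """Return new rows with `target` added as a copy of `source`."""
    return [{**row, target: row[source]} for row in rows]


# One source row, using the demo customer described earlier.
customers = [
    {"Customer": "Jimmy Adams", "City": "Cambridge", "Employment Status": "student"}
]

# After the copy, each row carries the status twice: one copy will be
# treated as Type 1 (overwritten), the other as Type 2 (history kept).
with_history = copy_column(customers, "Employment Status", "Employment Status History")
```

Both columns start with the same value; they only diverge later because the SCD component treats them differently.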

Next we configure the columns, including the newly created column called Employment Status History, in the SCD wizard. Double-click the SCD component, connect to the destination customer dimension, map the source columns to the customer dimension columns, and select the business key, as shown below.

We are not mapping the Start Date, End Date and Status columns, as they are metadata columns. Our customer dimension looks like the one below; please ignore the data in the diagram at this stage, as I will explain it at the end.

After the previous settings, click Next in the SCD wizard and decide which type each column is. In my case, every column except the Employment Status Previous column is set as a changing attribute (Type 1). The Employment Status Previous column, which we created earlier with the Copy Column transformation, is set as a historical attribute to make it Type 2. The settings are shown in the image below.

Click Next and apply the changing attribute settings shown in the image. By ticking the check box on this page, we tell the SCD component to update all the changing attributes (Type 1) to the latest value.
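Conceptually, the Type 1 behaviour chosen here can be sketched as an overwrite on every dimension row that shares the business key. This is a minimal illustration, not the component's actual implementation: `apply_type1` is a hypothetical helper, and it assumes the check box makes the update apply to all rows for the customer, including expired ones.

```python
def apply_type1(dimension, incoming, business_key, changing_columns):
    """Overwrite changing attributes on every row that shares the business key.

    Returns the number of rows touched. Assumes expired rows are
    updated too, so the "current" value is identical on all versions.
    """
    updated = 0
    for row in dimension:
        if row[business_key] == incoming[business_key]:
            for col in changing_columns:
                row[col] = incoming[col]
            updated += 1
    return updated
```

Because no new row is created, the old value of a changing attribute is lost, which is exactly why the employment status was copied into a second column first.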

Click Next again and configure the historical attribute. The Previous Employment Status column is the only column we mapped as a historical attribute. We will use the metadata columns Start Date and End Date, together with the system time variable, to manage the Type 2 changes. I will not go through the details, as there are plenty of articles on the net that explain them. The advantage of the Start Date and End Date timestamps is that they let us query the employment status at any point in time. The wizard settings described here are shown in the image.
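To make the Type 2 mechanics and the point-in-time query concrete, here is a small Python sketch. It is an illustration under stated assumptions rather than a description of the component's internals: the helpers `apply_type2` and `status_as_of` are hypothetical, and I assume the current row is the one whose End Date is empty (`None`).

```python
def apply_type2(dimension, incoming, business_key, historical_column, now):
    """Expire the current row and append a new one when the historical
    attribute changed. Returns True if a new version was inserted."""
    current = next(
        (r for r in dimension
         if r[business_key] == incoming[business_key] and r["End Date"] is None),
        None,
    )
    if current is not None:
        if current[historical_column] == incoming[historical_column]:
            return False  # nothing changed, keep the current version
        current["End Date"] = now  # close the previous version
    dimension.append({**incoming, "Start Date": now, "End Date": None})
    return True


def status_as_of(dimension, business_key, key_value, historical_column, when):
    """Return the historical attribute value that was valid at `when`."""
    for row in dimension:
        if row[business_key] != key_value:
            continue
        started = row["Start Date"] <= when
        not_ended = row["End Date"] is None or when < row["End Date"]
        if started and not_ended:
            return row[historical_column]
    return None  # no version existed at that time
```

`now` can be any comparable timestamp, for example a `datetime`. Passing it in explicitly mirrors the way a single system time value stamps both the End Date of the old row and the Start Date of the new one.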

Click Next and accept the default settings for the inferred members configuration, as we are not implementing inferred members. Finally, click Next to finish the wizard configuration.

After we run the package for the first time with the source data shown earlier, the dimension is loaded with the customer attributes as shown below. At this point the current employment status and the previous employment status are the same, as we haven't had any changes yet.

We will now insert a new record for the customer Jimmy Adams with the new employment status working, as shown below.

Now we run the package again. It inserts a new record and updates the end date of the previous record for Jimmy Adams, as shown below.

As shown above, the current employment status of Jimmy Adams is updated to the latest value, which in our case is working, while the previous status column contains all the values, both student and working. The expiry date of the previous record is shown in the End Date column.